Discover why 95% of enterprise AI pilots fail to deliver ROI and learn the hybrid architecture approach that’s helping companies capture $4.4 trillion in AI value.

Executive Summary (TL;DR)

Here’s the uncomfortable truth about enterprise AI adoption: Adoption ≠ ROI. While 78% of organizations now use AI in at least one business function, only a tiny minority describe their deployments as mature and scaled. You’re not alone in this enterprise AI adoption struggle—but you can’t afford to stay stuck.

- The pilot purgatory is real. A recent MIT study found 95% of enterprise gen-AI pilots fail to deliver measurable P&L impact—mostly due to integration, data, and governance gaps, not model capability. Meanwhile, 42% of companies scrapped most AI initiatives in 2025, up from 17% in 2024. That’s not a trend; it’s a crisis.

- Root cause: Most companies try to jam probabilistic AI into deterministic, rule-bound systems—or rip and replace the deterministic core altogether. Neither works. Forrester expects gen-AI will orchestrate less than 1% of core processes in 2025; rules still run the backbone.

- What actually works: A hybrid architecture—probabilistic intelligence inside deterministic guardrails—paired with explainability, TRiSM-style (AI Trust, Risk and Security Management) governance, and workflow redesign.

- Sales enablement starts with CPQ, the model use case: The category is growing fast (projected to reach ~$7.3B by 2030), and the value accelerates when probabilistic guidance runs inside deterministic guardrails. Proof at the edge: Manitou cut ordering time from 30 days to 1 day.

- The macro upside is real—but only for operators who integrate: McKinsey still sizes gen-AI’s potential at $2.6–$4.4T/year; Goldman estimates a ~7% lift to global GDP over a decade, while its 2024 follow-up stresses near-term gains hinge on deployment into workflows, not experiments.

The Great Enterprise Divide: Why Your AI Programs Keep Stalling

Picture this: You’re running two incompatible operating systems in the same company, and wondering why nothing works smoothly.

Your deterministic backbone: ERP, financial controls, compliance, SOX/SOC-auditable rules. Every decision must be explainable, repeatable, and governed. This is your company’s nervous system: mess with it carelessly, and you’re in regulatory hot water.

Your probabilistic promise: AI models produce likelihoods—churn risk 0.85, failure risk 0.37—not certainties. They’re superb at pattern recognition, less reliable at traceable, rule-justified actions that auditors love.

The failure mode isn’t replacement—it’s variance leakage. A “perfect” pricing/billing engine (deterministic) × an ~85% confidence recommender (probabilistic) ≠ perfect outcomes; small errors compound into disputes and rework. That’s why gen-AI doesn’t orchestrate the core; deterministic automation still does. (Forrester: “GenAI will orchestrate <1% of core business processes … RPA (Robotic Process Automation)/rules orchestrate the core.”)

Translation: You need both systems working together, not fighting each other. And you need to architect that interaction deliberately.
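To make the compounding concrete, here is a minimal sketch. It assumes, purely for illustration, that the ~85% figure is a per-line accuracy and that errors on different quote lines are independent. Neither assumption comes from the sources cited above, and `clean_quote_probability` is a hypothetical helper.

```python
def clean_quote_probability(p_line_correct: float, n_lines: int) -> float:
    """Probability that every AI-suggested line on a quote is correct.

    Assumes each line is right with the same probability and that errors
    are independent (an illustrative simplification, not a measured fact).
    """
    if not 0.0 <= p_line_correct <= 1.0:
        raise ValueError("p_line_correct must be between 0 and 1")
    if n_lines < 0:
        raise ValueError("n_lines must be non-negative")
    return p_line_correct ** n_lines


if __name__ == "__main__":
    for n_lines in (1, 5, 10, 20):
        share = clean_quote_probability(0.85, n_lines)
        print(f"{n_lines:>2} lines: {share:.1%} of quotes fully correct")
```

Under these assumptions a five-line quote is fully correct about 44% of the time and a ten-line quote just under 20% of the time, which is why suggestions need a deterministic check before they reach pricing or billing.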

Why So Many AI Projects Fail (Spoiler: It’s Not the Models)

Let’s get specific about what’s actually breaking your AI initiatives:

Data Trapped in Deterministic Silos

Your ERP and financial systems are pristine for transactions—terrible for context. When you feed uncontextualized, siloed data into probabilistic models, you torpedo both relevance and explainability. The latest MIT Media Lab analysis reports 95% of pilots show no measurable ROI, largely due to integration and workflow issues—not model performance.

Think about it: Your AI is only as smart as the data it can access. If that data lives in disconnected systems with no business context, you’re asking your AI to perform miracles with incomplete information.

Governance and Explainability Gaps

Here’s a conversation every CEO should dread: “Why did our AI approve this discount?” followed by silence. Auditors, boards, and regulators require traceability. Gartner’s AI TRiSM (AI trust, risk and security management) guidance emphasizes model transparency, runtime inspection, and policy enforcement. If you can’t explain why a decision was made, it won’t survive audit or, worse, regulatory scrutiny.

Tools like IBM AI Factsheets formalize model lineage, data provenance, and decision trails. But most companies treat explainability as an afterthought, not a design principle.

The Integration Cliff

Even when pilots “work” in controlled environments, they often die in production. In 2025, 42% of companies abandoned most AI initiatives, with 46% of POCs scrapped before scale, per S&P Global Market Intelligence. Top reasons: escalating costs, data privacy concerns, and missing operational controls.

This isn’t a technology problem—it’s an architecture problem. You’re trying to bolt AI onto systems that weren’t designed for it.

Leadership Readiness (The Real Bottleneck)

McKinsey’s research is eye-opening: almost all companies are investing in AI, but only roughly 1% say they’re mature. The blocker isn’t front-line employees resisting change—it’s leadership readiness and operating-model transformation.

Consider IgniteTech’s controversial approach: CEO Eric Vaughan mandated “AI Mondays,” and over time replaced roughly 80% of staff who resisted the change. You don’t need to agree with his methods to recognize the cultural headwinds leaders face when AI moves from demo to daily operations.

The uncomfortable truth: Your AI strategy is failing because you’re treating it like a technology upgrade instead of an operating model transformation.

The Hybrid Model: Making Probabilistic + Deterministic Actually Work

Stop thinking “AI instead of the process.” Start thinking “AI inside the process.” Here’s the architecture that actually works:

Deterministic guardrails: Business rules, policies, margin floors, regulatory caps, approval workflows. These are your non-negotiables—the boundaries within which AI can operate safely.

Probabilistic intelligence: Recommendations with confidence scores, ranked options, anomaly flags, and predicted outcomes. This is where AI adds value—pattern recognition, optimization, and intelligent suggestions.

Governance fabric: Explainability frameworks, model cards, runtime monitoring, audit trails, and AI TRiSM to enforce constraints. This is what keeps you out of regulatory trouble and board-level embarrassment.

Where it shines first: Places where humans already blend judgment with rules—pricing decisions, risk assessment, service entitlements, and Configure-Price-Quote (CPQ) processes.

CPQ: Your Real-World Proving Ground for Hybrid AI

Why start with CPQ?

Because product complexity, pricing policy, discount thresholds, and delivery feasibility collide in real time. That makes CPQ the ideal proving ground for hybrid AI—AI guidance operating inside deterministic guardrails.

Market signal (what’s happening):

Independent tracking projects CPQ to reach ~$7.3B by 2030 (from ~$2.5B in 2023), reflecting rising selling complexity across subscription/usage models, channel mixes, and compliance needs.

Hard outcomes (what hybrid delivers):

Manitou Group reduced order/quote time from ~30 days to ~1 day with a modern CPQ—evidence of cycle-time compression when AI-driven guidance runs inside rules.

How the Hybrid Works in Practice

- Deterministic layer: Enforce non-negotiables—compatibility rules, regulatory constraints (HIPAA compliance, for example), cost-to-serve calculations, minimum margins, required approvals. These are your safety rails.

- Probabilistic layer: Suggest attach options based on similar deals, recommend service tiers using pattern analysis, forecast churn risk at the account level, propose price ranges with confidence intervals. This is your competitive advantage.

- Decision pattern: AI proposes, rules constrain, humans approve—with a complete audit trail for every step. Simple, explainable, scalable.

Result: Faster, compliant quotes; higher win rates through relevant recommendations; margin protection through rule-enforced floors. Most importantly: measurable business impact.
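The "AI proposes, rules constrain, humans approve" pattern can be sketched in a few lines. This is a minimal illustration, not a product implementation: the catalog, the discount cap, and names such as `Suggestion`, `constrain`, and `run_pattern` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Suggestion:
    sku: str
    list_price: float
    discount_pct: float   # proposed discount, 0-100
    confidence: float     # model score in [0, 1]


@dataclass
class QuoteLineDecision:
    suggestion: Suggestion
    accepted: bool = False
    audit_trail: List[str] = field(default_factory=list)


# Hypothetical guardrail values; real ones come from pricing policy.
ACTIVE_SKUS = {"FIBER-1G", "SDWAN-STD"}
MAX_DISCOUNT_PCT = 20.0


def constrain(s: Suggestion) -> List[str]:
    """Deterministic checks; returns the names of violated rules."""
    violations = []
    if s.sku not in ACTIVE_SKUS:
        violations.append("sku_not_in_catalog")
    if s.discount_pct > MAX_DISCOUNT_PCT:
        violations.append("discount_above_cap")
    return violations


def run_pattern(s: Suggestion,
                approver: Callable[[Suggestion], bool]) -> QuoteLineDecision:
    """Propose, constrain, approve; every step is written to the audit trail."""
    decision = QuoteLineDecision(s)
    decision.audit_trail.append(
        f"proposed sku={s.sku} confidence={s.confidence:.2f}")
    violations = constrain(s)
    if violations:
        # Rules block the suggestion before a human ever sees it.
        decision.audit_trail.append("blocked_by_rules: " + ",".join(violations))
        return decision
    decision.audit_trail.append("rules_passed")
    decision.accepted = approver(s)
    decision.audit_trail.append(
        "approved_by_human" if decision.accepted else "rejected_by_human")
    return decision


ok = run_pattern(Suggestion("FIBER-1G", 1000.0, 10.0, 0.82), approver=lambda s: True)
bad = run_pattern(Suggestion("GHOST-SKU", 1000.0, 10.0, 0.97), approver=lambda s: True)
```

Note that the high-confidence `GHOST-SKU` suggestion never reaches the approver: model confidence does not override a catalog rule.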

The Prize: Why Getting the Blend Right Changes Everything

Firm-level value (what actually drives EBIT):

Organizations see returns when they rewire workflows and governance—not from model demos. McKinsey’s 2025 survey finds workflow redesign is the single biggest driver of EBIT impact from gen-AI.

Macro signal (where the money is flowing):

Hyperscalers are in a full sprint: Goldman Sachs Research now projects $736B in combined capex across the five largest U.S. hyperscalers in 2025–2026, alongside data-center power demand rising ~50% by 2027. That’s acceleration at infrastructure scale.

Acceleration factor (why your systems feel “maxed out”):

Training compute for notable AI models has been growing at roughly 4–5× per year (≈ 5–6 month doubling), which is outpacing how fast most enterprises can change core systems. The result: velocity at the edge, bottlenecks in the backbone—unless you design for hybrid.

Reality check (governance still rules the core):

Forrester’s 2025 view: gen-AI will orchestrate <1% of core processes this year; rules/RPA remain the primary orchestrators of mission-critical flow. Translation: use AI inside deterministic guardrails, not in place of them.

So what?

Operators who redesign processes and govern AI capture value; demo-driven adopters do not. The difference is execution—and a hybrid architecture (AI proposes → rules constrain → humans approve) that lets you ride the acceleration safely.

Your Multi-Phase Action Plan: From Pilot to Production

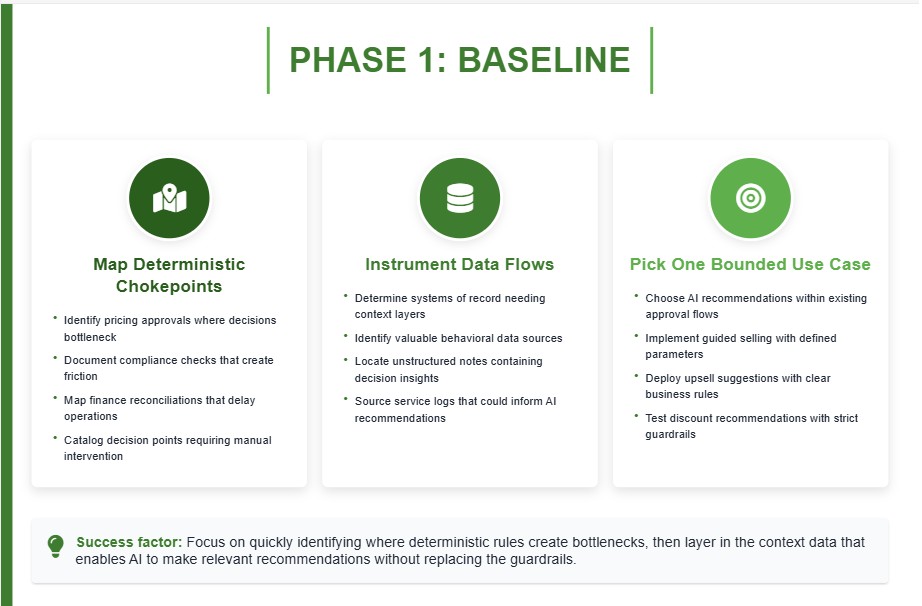

Phase 1: Baseline and “Where to Blend”

Map deterministic chokepoints: Identify pricing approvals, compliance checks, finance reconciliations where decisions currently bottleneck.

Instrument data flows: Determine which systems of record need context layers—behavioral data, unstructured notes, service logs that could inform AI recommendations.

Pick one bounded use case: Choose where AI can recommend within an existing approval flow—guided selling, upsell suggestions, discount recommendations with guardrails.

Phase 2: Design the Hybrid Architecture

Encode the rules + safety checks (policy-as-code).

Transform margin floors, caps, and exception routes into executable rules and add validation gates so probabilistic outputs can’t corrupt deterministic ledgers. Minimum set:

- Catalog reality checks: Suggestions must reference valid, active SKUs/Service IDs (region, availability).

- Compatibility & entitlement: Enforce BOM rules, license pre-reqs, serviceability, and customer entitlements.

- Pricebook integrity: Pull base prices only from approved pricebooks (effective dates, currency, taxes/fees).

- Margin protection & discounts: Line/bundle/deal margin floors; role/segment discount caps and exception routes.

- Deterministic pre-filters: AI can choose only within valid catalog/pricebook/policy scopes (no “phantom” items).

- Confidence thresholds: Advisory by default; auto-add/auto-price only when confidence ≥ agreed threshold and rules pass.

- Reconciliation test: Deterministically recompute the quote; block if recompute ≠ presented (prevents quiet drift).

- Hallucination catchers: Require source table/ID references; drop unseen IDs or off-book prices.

- Kill-switch: One-click “rules-only” fallback per domain (pricing, bundling, terms).
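A few of the gates above (pricebook integrity, margin floor, reconciliation test, kill-switch) can be expressed as policy-as-code roughly as follows. This is a sketch under stated assumptions: the pricebook, costs, floor value, and the `RULES_ONLY` flag are hypothetical stand-ins for whatever your systems of record provide.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical approved pricebook and unit costs, keyed by SKU.
PRICEBOOK: Dict[str, float] = {"FIBER-1G": 1000.0, "SDWAN-STD": 400.0}
UNIT_COST: Dict[str, float] = {"FIBER-1G": 600.0, "SDWAN-STD": 250.0}
MARGIN_FLOOR = 0.25                 # hypothetical minimum line margin
RULES_ONLY = {"pricing": False}     # kill-switch flag, one entry per domain


@dataclass
class Line:
    sku: str
    quantity: int
    discount_pct: float      # 0-100
    presented_total: float   # total shown on the quote


def recompute_total(line: Line) -> float:
    """Deterministic recompute from the approved pricebook."""
    unit = PRICEBOOK[line.sku] * (1 - line.discount_pct / 100)
    return round(unit * line.quantity, 2)


def validate(line: Line) -> List[str]:
    """Return the failed gates; an empty list means the line passes."""
    if line.sku not in PRICEBOOK:
        return ["phantom_sku"]   # nothing else can be checked without a price
    failures = []
    net_unit = PRICEBOOK[line.sku] * (1 - line.discount_pct / 100)
    if net_unit <= 0 or (net_unit - UNIT_COST[line.sku]) / net_unit < MARGIN_FLOOR:
        failures.append("below_margin_floor")
    if abs(recompute_total(line) - line.presented_total) > 0.005:
        failures.append("reconciliation_mismatch")
    return failures


def accept_ai_line(line: Line) -> bool:
    """Drop AI-suggested lines when the kill-switch is on or any gate fails."""
    if RULES_ONLY["pricing"]:
        return False
    return not validate(line)
```

Keeping the gates as plain functions over versioned data makes them easy to unit test and to review alongside the human-readable rule catalog.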

Design explainability upfront.

Define what’s logged in factsheets and audits from day one: inputs, features, rationale, model/version, rules hit/blocked, confidence, approver, outcome. Add TRiSM-style controls: runtime policy enforcement, drift monitors, access control, immutable logs, PII/PCI handling.
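One way to pin down "what's logged" is to write the record as a schema. The sketch below uses the fields listed above and chains each record to the previous one by hash, so edits become detectable. It is an assumption-laden illustration: `AuditRecord` and `AuditLog` are hypothetical names, and a hash chain makes a log tamper-evident rather than truly immutable.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AuditRecord:
    quote_id: str
    model_version: str
    inputs: dict
    rationale: str
    rules_hit: List[str]
    rules_blocked: List[str]
    confidence: float
    approver: Optional[str]
    outcome: str
    prev_hash: str = ""

    def digest(self) -> str:
        """SHA-256 over a canonical JSON rendering of the record."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


class AuditLog:
    """Append-only log; each record stores the hash of the one before it."""

    def __init__(self) -> None:
        self._records: List[AuditRecord] = []

    def append(self, **fields) -> AuditRecord:
        prev = self._records[-1].digest() if self._records else ""
        record = AuditRecord(prev_hash=prev, **fields)
        self._records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute the chain; False means some earlier record was altered."""
        prev = ""
        for record in self._records:
            if record.prev_hash != prev:
                return False
            prev = record.digest()
        return True
```

In production the chain head would typically be anchored somewhere the application cannot rewrite; that part is out of scope for this sketch.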

Define decision rights.

Clarify when AI advises vs. decides, and the triggers for human-in-the-loop review (e.g., sub-margin quotes, high discount, regulated products). Document approval semantics, confidence bands, and SLAs in a RACI.
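Decision rights are easier to audit when they are written as a single routing function. The following is a minimal sketch; the thresholds and the `Route` names are hypothetical and would come from your own RACI and pricing policy.

```python
from enum import Enum


class Route(Enum):
    AUTO_APPLY = "auto_apply"
    ADVISORY = "advisory"
    HUMAN_REVIEW = "human_review"


# Hypothetical policy values, for illustration only.
AUTO_APPLY_CONFIDENCE = 0.90
MARGIN_FLOOR = 0.25
HIGH_DISCOUNT_PCT = 15.0


def route_suggestion(confidence: float, margin: float,
                     discount_pct: float, regulated: bool) -> Route:
    """Decide whether a suggestion is applied, shown as advice, or escalated."""
    # Review triggers win over confidence: a confident model does not waive policy.
    if margin < MARGIN_FLOOR or discount_pct > HIGH_DISCOUNT_PCT or regulated:
        return Route.HUMAN_REVIEW
    if confidence >= AUTO_APPLY_CONFIDENCE:
        return Route.AUTO_APPLY
    return Route.ADVISORY
```

Checking the review triggers before the confidence band is a deliberate ordering: it keeps sub-margin, high-discount, and regulated cases in front of a human regardless of model score.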

Test strategy before any automation.

- Unit tests for rules (floors, caps, approvals) with 100% coverage of critical controls.

- Negative tests (non-existent SKU, expired price, over-discount, out-of-region service).

- Canary/shadow runs (2–4 weeks advisory-only) to measure override rate, margin deltas, and error catches.

- Regression & drift checks weekly; auto-revert on threshold breach.
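The negative tests listed above can be kept as a small data-driven table. This sketch assumes a toy catalog and pricebook; `check_line`, the fixtures, and the violation names are hypothetical.

```python
from datetime import date

# Hypothetical fixtures standing in for the catalog and pricebook.
CATALOG = {"FIBER-1G": {"regions": {"EU", "US"}}}
PRICES = {"FIBER-1G": {"price": 1000.0, "valid_to": date(2025, 12, 31)}}
MAX_DISCOUNT_PCT = 20.0


def check_line(sku: str, region: str, discount_pct: float, quote_date: date) -> list:
    """Return rule violations for one quote line; an empty list means valid."""
    if sku not in CATALOG:
        return ["non_existent_sku"]
    violations = []
    if region not in CATALOG[sku]["regions"]:
        violations.append("out_of_region")
    if quote_date > PRICES[sku]["valid_to"]:
        violations.append("expired_price")
    if discount_pct > MAX_DISCOUNT_PCT:
        violations.append("over_discount")
    return violations


# Each negative case names the single violation the rules must report.
NEGATIVE_CASES = [
    (("GHOST-SKU", "EU", 0.0, date(2025, 6, 1)), "non_existent_sku"),
    (("FIBER-1G", "EU", 0.0, date(2026, 1, 15)), "expired_price"),
    (("FIBER-1G", "EU", 35.0, date(2025, 6, 1)), "over_discount"),
    (("FIBER-1G", "APAC", 0.0, date(2025, 6, 1)), "out_of_region"),
]


def run_negative_tests() -> list:
    """Return descriptions of cases where the rules did not behave as expected."""
    failures = []
    for args, expected in NEGATIVE_CASES:
        got = check_line(*args)
        if got != [expected]:
            failures.append(f"{args}: expected [{expected}], got {got}")
    return failures
```

An empty result from `run_negative_tests()` is the pass condition; wiring it into CI means a rule change that silently admits a phantom SKU or an expired price fails the build.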

Month-end deliverables.

Rule catalog (human-readable) + policy-as-code repo (versioned); safety test suite with CI; factsheet/audit schema; runbook for kill-switch, rollback, and approvals.

Net effect: AI proposes, rules constrain, humans approve—and every suggestion is real, priced, compliant, and explainable before it hits the quote.

Phase 3: Launch and Learn

Ship to production in one domain: Start with CPQ attach recommendations, measure both business KPIs (win rate, cycle time, margin protection) and model KPIs (precision, recall, override rate, drift detection).

Create feedback loops: Feed outcomes back into both the model and the business rules. Make the system smarter over time.

Scale only when controls hold: Expand only when explainability and governance survive audit scrutiny.
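The model KPIs named above can be computed directly from logged outcomes. This is a minimal sketch that assumes each log row records whether an attach item was suggested, whether the customer bought it, and whether a human overrode the suggestion; the `Outcome` shape is hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Outcome:
    suggested: bool    # the model recommended this attach item
    purchased: bool    # the customer ended up buying it
    overridden: bool   # a human removed or changed the suggestion


def model_kpis(outcomes: Iterable[Outcome]) -> dict:
    """Precision, recall, and override rate for attach recommendations."""
    rows = list(outcomes)
    tp = sum(o.suggested and o.purchased for o in rows)
    fp = sum(o.suggested and not o.purchased for o in rows)
    fn = sum(not o.suggested and o.purchased for o in rows)
    suggested = tp + fp
    overridden = sum(o.suggested and o.overridden for o in rows)
    return {
        "precision": tp / suggested if suggested else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "override_rate": overridden / suggested if suggested else 0.0,
    }
```

Override rate is the one to watch during advisory-only runs: a high rate suggests either weak recommendations or guardrails and model disagreeing about what is sellable.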

This approach directly addresses the S&P Global reality of high failure rates with a production-first posture that blends rules and probabilistic guidance—dramatically reducing the chance your initiative becomes part of the 42% abandoned in 2025.

Guardrails That Keep You Out of Headlines

AI TRiSM (Gartner framework): Establish policies, runtime inspection, content anomaly detection, access control, and continuous validation. Make governance a feature, not an afterthought.

Model transparency (IBM Factsheets approach): Track purpose, data lineage, model versions, parameters, and approvals—so every pricing decision or risk assessment is explainable to auditors and regulators.

Approval semantics: Document when AI suggestions are advisory versus binding; require confidence thresholds and rule conformance for automated actions. Clarity prevents chaos.

A Note on Culture and Change Management

Culture drives outcomes more than technology. The IgniteTech story—mandated “AI Mondays” followed by significant workforce changes—shows how volatile transformations can become when change management is mishandled.

Your goal isn’t shock therapy; it’s capability building with accountability. That means clear incentives, safe experimentation environments, and enterprise-grade guardrails that protect both the business and employees.

Successful transformation requires: Transparent communication about AI’s role, comprehensive retraining programs, and career development pathways that show how AI enhances rather than replaces human judgment.

Why servicePath™ for Hybrid CPQ Excellence

If you sell complex technology solutions—ISPs, MSPs, telco, VAR/SI—you operate exactly where configuration complexity, pricing risk, and compliance requirements intersect. This is precisely the intersection the hybrid model is designed to optimize.

What servicePath™ brings to your transformation:

- Product catalogs are bigger and change faster than ever. You need guardrails to keep rules and logic in sync and to return accurate pricing at the point of quote—this is exactly what AI-native CPQ is built for.

- Deterministic accuracy your CFO and auditors trust: Pricing rules, margin floors, approval workflows, legal language, cost-to-serve calculations—all auditable and explainable.

- Probabilistic intelligence your sales teams love: Guided selling, intelligent attach recommendations, pattern-based suggestions, predicted discount sensitivity—all with confidence scores.

- No-code agility: Adapt pricing and configuration logic without IT bottlenecks or development cycles.

- Enterprise-grade integrations: Seamless connectivity with Dynamics 365, Salesforce, NetSuite, and other critical business systems.

What customers consistently see:

- Faster, compliant quotes with auditable governance built-in

- Fewer reworks and undeliverable configurations

- Higher attach rates and deal sizes through relevant, explainable guidance

- Measurable improvement in sales cycle efficiency

Ready to de-risk your AI in revenue operations?

Book a strategic consultation: https://servicepath.co/contact/ (Speak directly with a CPQ+ architect about your rule stack, data flows, and AI guidance patterns)

The Technology Architecture That Actually Works

Building a hybrid AI system isn’t just about connecting APIs—it requires thoughtful architecture that balances innovation with operational stability. The most successful implementations follow a layered approach that separates concerns while enabling seamless integration.

The Data Layer: Your foundation must handle both structured transactional data and unstructured contextual information. Modern AI implementations leverage cloud-native data lakes that can ingest, process, and serve data in real-time while maintaining strict governance controls. Companies that invest in robust data architecture early consistently outperform those attempting to retrofit data capabilities around AI applications.

The Intelligence Layer: This is where probabilistic models live—recommendation engines, pattern recognition systems, predictive analytics. But here’s the key: these models must be designed for explainability from day one. Black-box systems might work in consumer applications, but enterprise environments demand transparency at every decision point.

The Rules Layer: Your deterministic backbone—business logic, compliance requirements, approval workflows. This layer acts as both guardrails and governance, ensuring AI recommendations align with business policies and regulatory requirements. The most successful implementations treat this layer as a competitive advantage, not a constraint.

The Integration Layer: APIs, microservices, and event-driven architectures that enable real-time communication between layers. This is where many implementations fail—underestimating the complexity of enterprise integration and the performance requirements of real-time decision-making.

The Governance Layer: Monitoring, auditing, and control systems that ensure AI operates within defined parameters. This includes model performance tracking, bias detection, drift monitoring, and compliance reporting. Organizations that treat governance as an afterthought consistently struggle with scaling AI initiatives.

Organizational Transformation: Beyond Technology

The human dimension of AI implementation often determines success more than technical considerations. Organizations that successfully deploy AI at scale invest heavily in change management, training, and cultural transformation initiatives that prepare their workforce for AI-augmented operations.

Leadership Alignment: Success requires more than executive sponsorship—it demands active participation in AI governance, strategic decision-making, and cultural transformation. The most effective leaders become AI-literate themselves, understanding both capabilities and limitations well enough to make informed strategic decisions.

Skills Development: Training programs must address both technical competencies and conceptual understanding. Employees need to understand how to collaborate effectively with AI systems while maintaining critical thinking and decision-making capabilities. The most successful programs combine hands-on experience with theoretical knowledge, enabling teams to leverage AI while recognizing its boundaries.

Cultural Evolution: Resistance to AI adoption typically stems from fear of job displacement rather than technical complexity. Successful organizations address these concerns proactively through transparent communication, comprehensive retraining programs, and clear career development pathways that demonstrate how AI enhances rather than replaces human capabilities.

Performance Metrics: Traditional KPIs often fail to capture AI’s impact. Organizations need new metrics that measure both efficiency gains and quality improvements. This includes tracking recommendation acceptance rates, decision accuracy, time-to-value, and user satisfaction alongside traditional business metrics.

Risk Management in AI Implementation

Enterprise AI deployment introduces new categories of risk that traditional IT risk frameworks may not adequately address. Successful organizations develop AI-specific risk management strategies that address technical, operational, and strategic concerns.

- Model Risk: AI models can degrade over time due to data drift, changing business conditions, or adversarial inputs. Robust monitoring systems must track model performance continuously and trigger retraining or intervention when performance degrades below acceptable thresholds.

- Data Risk: AI systems are only as good as their training data. Poor data quality, bias, or privacy violations can create significant liability. Organizations need comprehensive data governance frameworks that address collection, storage, processing, and retention throughout the AI lifecycle.

- Operational Risk: AI systems can fail in unexpected ways, potentially disrupting critical business processes. Contingency planning must include fallback procedures, manual overrides, and rapid response protocols for AI system failures.

- Regulatory Risk: The regulatory landscape for AI is evolving rapidly. Organizations must stay current with emerging regulations while building systems that can adapt to changing compliance requirements. This includes implementing audit trails, explainability features, and governance controls that satisfy current and anticipated regulatory demands.

- Competitive Risk: AI capabilities can become competitive advantages—or disadvantages. Organizations that fall behind in AI adoption may find themselves at a significant competitive disadvantage, while those that move too quickly without proper controls may face operational or regulatory challenges.
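For the Model Risk and Operational Risk items above, continuous monitoring with a fallback trigger can be as simple as a rolling window. The sketch below is one possible shape, not a recommended threshold set: `RollingMonitor`, the window size, and the accuracy floor are hypothetical.

```python
from collections import deque


class RollingMonitor:
    """Tracks a rolling share of correct predictions and flags degradation."""

    def __init__(self, window: int = 200, min_accuracy: float = 0.80,
                 min_samples: int = 50) -> None:
        self._hits = deque(maxlen=window)   # oldest results fall off automatically
        self.min_accuracy = min_accuracy
        self.min_samples = min_samples

    def record(self, correct: bool) -> None:
        self._hits.append(1 if correct else 0)

    @property
    def accuracy(self) -> float:
        return sum(self._hits) / len(self._hits) if self._hits else 1.0

    def should_fall_back(self) -> bool:
        """True once enough samples exist and accuracy is below the floor."""
        return (len(self._hits) >= self.min_samples
                and self.accuracy < self.min_accuracy)
```

When `should_fall_back()` returns True, the caller would switch the affected domain to rules-only operation and open a retraining or investigation ticket; what "correct" means (accepted suggestion, won deal, no rework) is a business definition this sketch leaves open.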

Executive FAQs: The Questions Your Board Is Asking

1) “If AI is so powerful, why is my ROI still elusive?”

Because integration and governance, not modeling capability, are the actual bottlenecks. The MIT finding that 95% of pilots show no measurable P&L impact underscores that value comes from operational integration—workflows, data architecture, controls—not impressive demos. Companies abandoning projects consistently cite costs, privacy concerns, and governance gaps as primary reasons.

The uncomfortable truth is that most organizations treat AI as a technology problem when it’s actually an operating model challenge. You’re not just implementing software—you’re redesigning how work gets done, how decisions get made, and how value gets created.

2) “Is the failure rate improving or getting worse?”

Worse in 2025. 42% of companies scrapped most initiatives, up from 17% in 2024; nearly half of POCs are abandoned before production. The fix is starting inside existing rule-based processes (CPQ, pricing, risk assessment) and adding AI where it can recommend safely—then scaling deliberately.

This trend reflects a maturation in the market—organizations are becoming more realistic about AI’s limitations and more demanding about measurable business outcomes. The companies succeeding are those that approach AI implementation with the same rigor they apply to other major operational transformations.

3) “Why not replace our deterministic systems with AI agents?”

Because rules still run the core business. Forrester expects gen-AI to orchestrate less than 1% of core processes this year. Regulators and auditors require explainability and control that pure AI systems can’t provide. The winning pattern is hybrid: AI proposes, rules constrain, humans approve, and every step is logged.

Think of it this way: your deterministic systems are your company’s constitution—they encode the fundamental rules by which you operate. AI is more like an advisor—brilliant at pattern recognition and optimization, but not equipped to make constitutional decisions about how your business should operate.

4) “Where should we focus for a sensible first win?”

Configure-Price-Quote (CPQ) processes. They’re rule-heavy (perfect for deterministic governance) and data-rich (fertile ground for AI recommendations). The CPQ market’s growth trajectory and case studies like Manitou’s 30-to-1-day cycle-time improvement show the clear path from proof-of-concept to profit.

CPQ is ideal because it combines complexity with clear business value. Sales teams understand the pain points, finance teams can measure the impact, and IT teams can implement solutions within existing system architectures. Success here creates momentum for broader AI initiatives.

5) “Is the macro upside real—or already overhyped?”

The upside is very real, if you integrate properly. McKinsey pegs gen-AI’s potential at $2.6–$4.4T annually; Goldman Sachs projects a roughly 7% lift to global GDP over ten years. But these are operator outcomes, not demo outcomes. They require re-platforming workflows and governance, not just purchasing licenses.

The key word is “if.” The macro projections assume widespread, effective integration—not just adoption. The organizations that capture this value will be those that treat AI as a fundamental business transformation, not a technology upgrade.

6) “How do we measure success beyond traditional ROI?”

AI success requires new metrics that capture both efficiency and effectiveness improvements. Track recommendation acceptance rates, decision accuracy, time-to-insight, and user satisfaction alongside traditional financial metrics. The most successful implementations show improvements across multiple dimensions—faster decisions, better outcomes, and enhanced employee satisfaction.

7) “What’s our biggest risk if we don’t act?”

Competitive displacement. AI is becoming a competitive necessity, not just an advantage. Organizations that fall behind in AI capabilities may find themselves unable to compete on speed, accuracy, or cost-effectiveness. The window for deliberate, strategic AI adoption is narrowing as market leaders establish AI-driven competitive moats.

Implementation Lessons from the Field

Real-world AI implementations reveal patterns that separate successful deployments from expensive failures. These lessons, gathered from organizations across industries, provide practical guidance for executives navigating their own AI transformations.

Start Small, Think Big: The most successful AI implementations begin with narrow, well-defined use cases that deliver clear business value. Organizations that attempt enterprise-wide AI transformations from day one consistently struggle with complexity, integration challenges, and change management issues. Instead, identify a single process where AI can provide immediate value—then use that success to build momentum for broader initiatives.

Data Quality Trumps Model Sophistication: Advanced AI models cannot compensate for poor data quality. Organizations that invest in data cleansing, standardization, and governance before implementing AI consistently outperform those that rush to deploy sophisticated models on messy data. The most successful implementations spend 60-70% of their effort on data preparation and only 30-40% on model development and deployment.

Governance Is a Competitive Advantage: Organizations that treat AI governance as a constraint consistently struggle with scaling initiatives. Those that design governance into their AI systems from the beginning find it becomes a competitive advantage—enabling faster deployment, better risk management, and stronger stakeholder confidence. Governance frameworks should address model development, deployment, monitoring, and retirement throughout the AI lifecycle.

User Adoption Drives Value: The most sophisticated AI system delivers no value if users don’t adopt it. Successful implementations prioritize user experience, training, and change management alongside technical development. This includes designing intuitive interfaces, providing comprehensive training, and creating feedback mechanisms that allow users to improve system performance over time.

The Economics of AI Implementation

Understanding the true cost and value of AI implementation helps executives make informed investment decisions and set realistic expectations for returns.

Total Cost of Ownership: AI implementations involve more than software licensing costs. Successful organizations budget for data infrastructure, integration work, training, change management, and ongoing maintenance. Industry analysis suggests budgeting $3-5 for implementation and operations for every dollar spent on AI software.

Value Realization Timeline: AI value typically follows a J-curve—initial investments exceed returns as organizations build capabilities. Most successful implementations show positive ROI within 12-18 months, with significant acceleration in years 2-3 as capabilities mature and scale.
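The budgeting rule and the J-curve can be turned into quick arithmetic. The sketch below reads "$3-5 for every dollar of software" as a cost on top of the software spend, which is an interpretation, and the cash-flow numbers in the usage lines are invented for illustration only.

```python
from typing import List, Optional


def total_cost_of_ownership(software_spend: float, multiplier: float) -> float:
    """Software spend plus implementation/operations at `multiplier` per dollar."""
    return software_spend * (1 + multiplier)


def payback_month(upfront_cost: float, monthly_run_cost: float,
                  monthly_benefit: List[float]) -> Optional[int]:
    """First month (1-based) in which cumulative net cash flow is non-negative."""
    cumulative = -upfront_cost
    for month, benefit in enumerate(monthly_benefit, start=1):
        cumulative += benefit - monthly_run_cost
        if cumulative >= 0:
            return month
    return None   # no payback within the horizon supplied


# Hypothetical figures (in thousands): benefits ramp by 5 per month.
low, high = total_cost_of_ownership(100.0, 3), total_cost_of_ownership(100.0, 5)
ramp = [5.0 * m for m in range(1, 25)]
month = payback_month(upfront_cost=300.0, monthly_run_cost=10.0, monthly_benefit=ramp)
```

With these made-up inputs, 100 of software implies a total budget of 400 to 600, and the ramp reaches cumulative break-even in month 13. The early months run negative, which is the dip of the J-curve.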

Portfolio Approach: Rather than betting everything on a single AI initiative, successful organizations build portfolios of AI investments with different risk profiles and timelines. This reduces overall risk while increasing the likelihood of breakthrough successes.

Building AI-Ready Organizations

Organizational readiness often determines AI success more than technical capabilities. Companies that successfully scale AI share common characteristics that enable effective adoption and value realization.

- Executive Literacy: Successful implementations require executives who understand AI capabilities and limitations well enough to make informed strategic decisions. This means sufficient understanding to evaluate proposals, allocate resources effectively, and provide meaningful oversight.

- Cross-Functional Collaboration: AI initiatives that remain siloed within IT consistently struggle to deliver business value. Successful implementations involve close collaboration between business stakeholders, technical teams, and operational staff throughout development and deployment.

- Continuous Learning Culture: AI technologies evolve rapidly, requiring organizations to continuously update capabilities and approaches. Companies that foster cultures of experimentation and adaptation consistently outperform those that treat AI as a one-time implementation project.

The Future of Hybrid AI Systems

The hybrid approach represents more than a temporary solution—it’s likely to become the dominant architecture for enterprise AI systems as organizations balance innovation with operational stability.

- Regulatory Evolution: As AI regulations mature, hybrid architectures combining explainable deterministic systems with AI capabilities will likely become compliance requirements rather than best practices. Organizations building hybrid systems today position themselves advantageously for future regulatory requirements.

- Technology Convergence: Emerging technologies like edge computing and quantum computing will enhance hybrid AI capabilities while maintaining governance and explainability requirements for enterprises.

- Competitive Dynamics: As AI becomes commoditized, competitive advantage will shift from having AI to having AI deeply integrated with core business processes and protected by deterministic guardrails that maintain operational control.

Final Word: From Strategy to Execution

The question isn’t whether you’ll need to blend probabilistic and deterministic systems; it’s how quickly you can do it safely and effectively. Companies winning with AI treat it as a fundamental operating-model upgrade, not a simple feature addition or technology bolt-on.

Start where the rules already live: CPQ, pricing, risk assessment. Layer explainable guidance on top. Enforce guardrails religiously. Measure business outcomes, not just model metrics. Then scale deliberately.

The $4.4 trillion opportunity is real, but it belongs to operators who integrate AI into their business processes, not to organizations that treat AI as a technology experiment. Success requires treating AI implementation as a fundamental business transformation that touches strategy, operations, culture, and governance.

The organizations that capture AI’s full value will be those that master the hybrid approach—combining the pattern recognition power of probabilistic systems with the reliability and explainability of deterministic processes. This isn’t just about technology architecture; it’s about building organizations that can harness AI’s potential while maintaining enterprise control and governance.

And if quote-to-cash is your bottleneck, servicePath™ CPQ+ is purpose-built for this exact hybrid approach—turning AI from an impressive demo into dependable gross margin improvement.

The Hybrid Path to Enterprise AI Value

The lesson is clear: AI pilots keep failing not because the models are weak, but because integration, governance, and operating models are neglected. By contrast, hybrid architectures—where probabilistic AI operates inside deterministic guardrails—deliver measurable impact: faster quotes, higher win rates, and stronger margin protection.

Strategic Reality for 2025–2026

- Failure is the default: 95% of gen-AI pilots fail to deliver measurable P&L impact.

- Governance is non-negotiable: Regulators, boards, and auditors demand explainability and auditability.

- Advantage is shifting: Competitors that integrate AI with guardrails are capturing cycle-time and revenue gains today.

- Leadership is decisive: Executives who rewire workflows for hybrid adoption will own the market.

Your Next Strategic Challenge: Execution at Scale

Winning with AI isn’t about demos or proofs of concept. It’s about disciplined execution:

- Redesigning workflows to integrate AI into mission-critical processes

- Encoding rules, compliance, and margin protections from day one

- Building explainability into every decision to survive audit and regulatory scrutiny

- Driving adoption through role-specific playbooks, training, and incentives

Ready to Capture Your Share of the $4.4T Opportunity?

The window for enterprise AI leadership is narrowing fast. Organizations that act now will build durable competitive advantages—while others remain stuck in “pilot purgatory.”

Take Action with servicePath™:

- Executive Demo — See AI guidance operating seamlessly inside deterministic CPQ guardrails

- Strategic Consultation — Work with CPQ+ architects on your governance, data, and workflow blueprint

- Proven Outcomes — Learn how peers compressed cycles from 30 days to 1, while protecting margins

servicePath™ combines 14+ years of CPQ expertise with Gartner-recognized innovation, helping technology enterprises turn AI from a risky experiment into a governed revenue engine.

The choice is clear: Operators who master hybrid AI will own the future.

Sources & Further Reading

- Adoption & maturity: McKinsey State of AI; McKinsey Superagency

- Failure/abandonment: MIT/NANDA; S&P Global Market Intelligence

- Process orchestration: Forrester Predictions

- Governance: Gartner AI TRiSM; IBM Factsheets

- CPQ market: ResearchAndMarkets (~$7.3B); PROS case study

- Economic impact: McKinsey gen-AI value; Goldman Sachs GDP projections